Section: New Results

Interaction and Design for Audiovisual Virtual Environments

Lighting Design for Material Depiction

Participants : Adrien Bousseau, Emmanuelle Chapoulie.

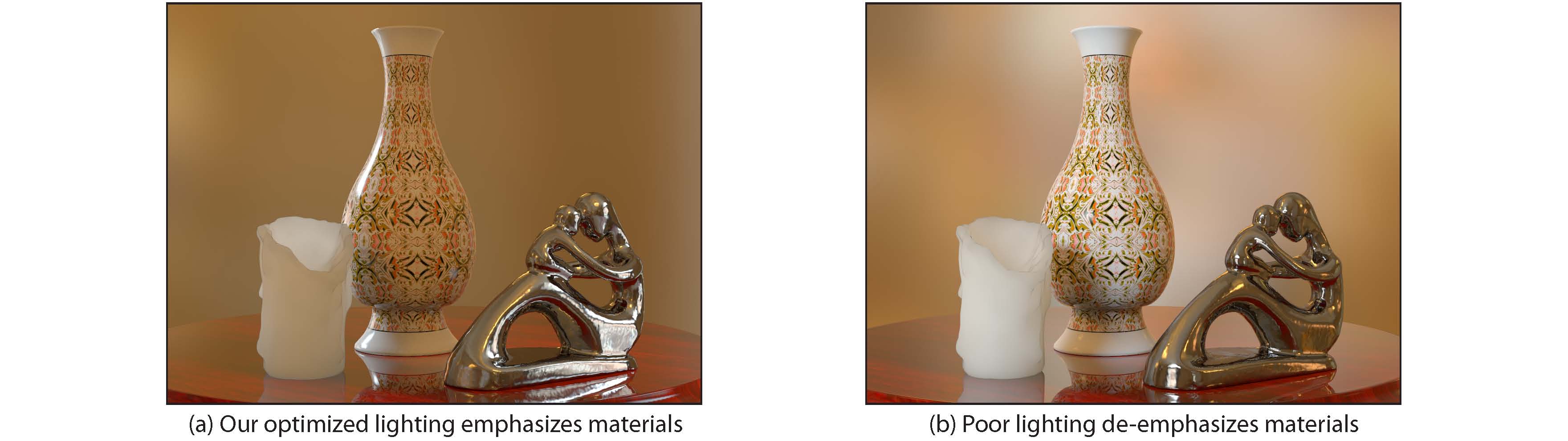

Shading, reflections and refractions are important visual features for understanding the shapes and materials in an image. While well-designed lighting configurations can enhance these features and facilitate image perception (Figure 12 b), poor lighting design can lead to misinterpretation of image content (Figure 12 a).


We have presented an automated system for optimizing and synthesizing environment maps that enhance the appearance of materials in a scene. We first identified a set of lighting design principles for material depiction. Each principle specifies the distinctive visual features of a material and describes how environment maps can emphasize those features. We then proposed a general optimization framework to solve for the environment map that best fulfills the design principles. Finally, we described two techniques for transforming existing photographic environment maps to better emphasize materials. Our approach generates environment maps that enhance the depiction of a variety of materials, including glass, metal, plastic, marble and velvet.
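The report does not give the objective used by the optimization framework. As a rough illustration only, the sketch below assumes that each design principle can be written as a weighted least-squares term on image features that depend linearly on the environment map (rendered radiance is linear in the incident lighting), and solves for a non-negative environment map by projected gradient descent. The function name `optimize_envmap`, the `(weight, A, b)` term format and the step size are hypothetical, not the published formulation.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
# One design-principle term: (weight w_k, feature matrix A_k, target features b_k).
# Hypothetical formulation: each principle penalises w_k * ||A_k L - b_k||^2.
Term = Tuple[float, Matrix, List[float]]


def matvec(a: Matrix, x: Sequence[float]) -> List[float]:
    """Return the matrix-vector product a @ x."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def optimize_envmap(terms: Sequence[Term], n_dirs: int,
                    steps: int = 2000, lr: float = 0.05) -> List[float]:
    """Minimise sum_k w_k * ||A_k L - b_k||^2 subject to L >= 0.

    L holds one radiance value per direction bin of the environment map.
    Each A_k maps L to image features; this is linear because rendered
    radiance is linear in the incident lighting.
    """
    env = [0.0] * n_dirs
    for _ in range(steps):
        grad = [0.0] * n_dirs
        for w, a, b in terms:
            residual = [f - t for f, t in zip(matvec(a, env), b)]
            # Gradient of w * ||A L - b||^2 is 2 w A^T (A L - b).
            for i, row in enumerate(a):
                for j, aij in enumerate(row):
                    grad[j] += 2.0 * w * aij * residual[i]
        # Gradient step, then project onto the feasible set (no negative light).
        env = [max(0.0, e - lr * g) for e, g in zip(env, grad)]
    return env
```

For example, with two direction bins, one term targeting a feature value of 1.0 on the first bin and another targeting 0.5 on the second, `optimize_envmap([(1.0, [[1, 0]], [1.0]), (1.0, [[0, 1]], [0.5])], 2)` converges to approximately `[1.0, 0.5]`. A practical system would use many direction bins, features measured on rendered images, and a solver suited to much larger problems.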

This work is a collaboration with Ravi Ramamoorthi and Maneesh Agrawala (UC Berkeley) as part of our CRISP associate team with UC Berkeley. The work was published in a special issue of the journal Computer Graphics Forum and presented at the Eurographics Symposium on Rendering 2011.

A Multimode Immersive Conceptual Design System for Architectural Modeling and Lighting

Participants : Marcio Cabral, Peter Vangorp, Gaurav Chaurasia, Emmanuelle Chapoulie, Martin Hachet, George Drettakis.

We developed a system that supports simple architectural design in immersive environments. Users can define the initial conceptual design of a model and take the effects of daylight into account. The system allows the manipulation of simple elements such as windows, doors and rooms, while the rest of the model is automatically adjusted in response. It runs on a four-sided stereoscopic, head-tracked immersive display. We also provide simple lighting design capabilities, with an abstract representation of sunlight and its effects when shining through a window. The system offers three interaction modes: a miniature-model table mode, a full-scale immersive mode, and a combination of the two, which we call mixed mode (see Figure 13). Our goal is to study direct manipulation for basic 3D modeling in an immersive setting, in the context of conceptual or initial architectural design.
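The report does not describe how the abstract sunlight representation is computed. One simple way to obtain such a representation, shown here as a hypothetical sketch, assumes a flat floor at z = 0 with z pointing up, a directional sun, and a planar window given by its corners: each corner is cast along the sun direction onto the floor, and because parallel projection is affine the resulting polygon is the lit patch. The helper names `sun_patch_on_floor` and `polygon_area` are illustrative, and occlusion by other walls is ignored.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def sun_patch_on_floor(window: Sequence[Point3], sun_dir: Point3) -> List[Point2]:
    """Project window corners along the sun direction onto the floor z = 0.

    sun_dir points from the sun into the room, so it must have sun_dir[2] < 0.
    Returns the (x, y) corners of the lit patch, in the window's corner order.
    Hypothetical helper: occlusion by walls or furniture is not handled.
    """
    dx, dy, dz = sun_dir
    if dz >= 0:
        raise ValueError("sunlight must travel downward (sun_dir z < 0)")
    patch = []
    for px, py, pz in window:
        t = -pz / dz  # ray parameter where p + t * d reaches z = 0
        patch.append((px + t * dx, py + t * dy))
    return patch


def polygon_area(poly: Sequence[Point2]) -> float:
    """Shoelace formula for the area of a simple polygon."""
    n = len(poly)
    s = sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
            for i in range(n))
    return abs(s) / 2.0
```

For a 1 x 1 window in the wall x = 0, between heights 1 and 2, lit by a sun at 45 degrees (`sun_dir = (1, 0, -1)`), the patch spans x from 1 to 2 on the floor and has area 1. Moving the window or changing the sun direction simply re-runs the projection, which keeps the representation cheap enough for interactive manipulation.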

We performed an initial pilot user study to evaluate the relative merits of each mode for a set of basic tasks, such as resizing and moving windows or walls, and a basic light-matching task. The study indicates that users appreciated the immersive nature of the system and found the interaction natural and pleasant. In addition, mean task completion times appear similar across the three modes, which suggests that the modes could be combined in effective immersive modeling systems for novice users.

Walking in a Cube: Novel Metaphors for Safely Navigating Large Virtual Environments in Restricted Real Workspaces

Participants : Peter Vangorp, Emmanuelle Chapoulie, George Drettakis.

Immersive spaces such as four-sided displays with stereo viewing and high-quality tracking provide a very engaging and realistic virtual experience. However, walking is inherently limited by the restricted physical space, both by the screens (limited translation) and by the missing back screen (limited rotation). We propose three novel navigation techniques with three concurrent goals: keeping the user safely away from the translational and rotational boundaries; increasing the amount of real walking; and providing a more enjoyable and ecological interaction paradigm than traditional controller-based approaches. In particular, we introduce the “Virtual Companion”, which uses a small bird to guide the user through virtual environments (VEs) larger than the physical space. We evaluate the three techniques through a user study with pointing and path-following tasks. The study provides insight into the relative strengths of each technique with respect to the three goals.
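All three techniques must keep the user away from the translational and rotational boundaries. The report does not specify how these limits are detected; the sketch below assumes a rectangular, floor-aligned workspace centred at the origin, with the front screen at +y and the open back at -y, and flags a tracked head pose that comes within a safety margin of a screen or turns too far toward the missing back screen. The `Workspace` fields, thresholds and warning strings are hypothetical, and this is only a safety test, not any of the proposed navigation metaphors.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Workspace:
    half_width: float   # distance from centre to the left/right screens (x axis)
    half_depth: float   # distance from centre to the front screen / open back (y axis)
    margin: float       # safety distance that triggers a translational warning
    max_yaw_deg: float  # head yaw away from the front screen that triggers a rotational warning


def boundary_warnings(ws: Workspace, x: float, y: float, yaw_deg: float) -> List[str]:
    """Return the boundaries the tracked head pose is too close to.

    (x, y) is the head position on the floor plane; yaw_deg = 0 means the
    user faces the front screen.
    """
    warnings = []
    if abs(x) > ws.half_width - ws.margin:
        warnings.append("side screen")
    if y > ws.half_depth - ws.margin:
        warnings.append("front screen")
    if y < -(ws.half_depth - ws.margin):
        warnings.append("open back")
    # Wrap yaw into [-180, 180) so that, e.g., 370 and 10 degrees are treated alike.
    yaw = (yaw_deg + 180.0) % 360.0 - 180.0
    if abs(yaw) > ws.max_yaw_deg:
        warnings.append("missing back screen in view")
    return warnings
```

A navigation technique would poll such a test every frame and react to its output, for instance by steering a guide such as the Virtual Companion back toward the centre of the workspace.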

This work is a collaboration with Gabriel Cirio, Maud Marchal, and Anatole Lécuyer (VR4I / INRIA Rennes) in the context of ARC NIEVE (Section 7.2.1) and has been accepted for publication [17].

Inferring Normals Over Design Sketches

Participant : Adrien Bousseau.

We are currently working on a sketch-based tool to infer normals over a 2D drawing. Our tool should allow users to apply realistic and non-photorealistic shading over the drawing, with applications in product design.
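Once a normal is known at each pixel of the drawing, shading reduces to evaluating a lighting model per pixel. The report does not say which shading models the tool will use; the sketch below assumes simple Lambertian (diffuse) shading for the realistic case, and a banded cel-shading quantisation of the same term as one example of non-photorealistic shading. The function names and the `levels` parameter are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale v to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def lambert(normal: Vec3, light_dir: Vec3, albedo: float = 1.0) -> float:
    """Diffuse shading: albedo * max(0, n . l), with l pointing toward the light."""
    n = normalize(normal)
    l = normalize(light_dir)
    return albedo * max(0.0, n[0] * l[0] + n[1] * l[1] + n[2] * l[2])


def toon(normal: Vec3, light_dir: Vec3, levels: int = 3) -> float:
    """Quantise diffuse shading into `levels` flat bands (requires levels >= 2)."""
    s = lambert(normal, light_dir)
    band = min(levels - 1, int(s * levels))
    return band / (levels - 1)
```

Applied per pixel, `lambert` gives smooth shading, while `toon` collapses the same values into a few flat tones; both only need the inferred normal and a light direction, which is why normals are a convenient intermediate representation for the drawing.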

This work is a collaboration with Alla Sheffer (University of British Columbia), Cloud Shao and Karan Singh (University of Toronto).

Using Natural Gestures in Immersive Virtual Reality Spaces

Participants : Emmanuelle Chapoulie, George Drettakis.

We are studying the use of gestures that are as natural as possible in immersive virtual reality environments. To evaluate the usability of our interaction approach, we define a scenario consisting of a sequence of tasks (hiding, finding, pushing, pulling, grabbing, picking up and putting down objects) that users will perform both with their hands and with wands. Each task will be used to evaluate a specific criterion.
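The evaluation protocol can be represented as a simple trial list in which every task of the scenario is performed with each input condition. The sketch below is hypothetical: it assumes one trial per task and condition, and that the order of the two conditions (hands, wands) alternates across participants to counterbalance learning effects. The report does not state how conditions are ordered, and the criterion attached to each task is omitted because it is not given.

```python
from typing import List, Tuple

# Task sequence of the scenario, as listed in the report.
TASKS = ("hiding", "finding", "pushing", "pulling",
         "grabbing", "picking up", "putting down")
# Input conditions compared in the study.
CONDITIONS = ("hands", "wands")


def scenario(participant_id: int) -> List[Tuple[str, str]]:
    """Return the (condition, task) trials for one participant.

    Hypothetical counterbalancing: even-numbered participants start with
    hands, odd-numbered participants start with wands.
    """
    order = CONDITIONS if participant_id % 2 == 0 else CONDITIONS[::-1]
    return [(condition, task) for condition in order for task in TASKS]
```

Keeping the protocol as data makes it easy to log each trial against its task and condition, so that per-task measures can later be compared between hands and wands.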